Program

Timeline

08:00 – 08:45 AM Registration and Coffee/Pastries

08:45 – 09:00 AM Introduction

09:00 – 10:15 AM Session #1 – The Need for Speed

10:15 – 10:30 AM Morning break

10:30 – 11:20 AM Session #2 – How to Avoid Pulling Your Hair Out

11:20 – 11:35 AM Morning break

11:35 – 12:25 PM Session #3 – Pipelines, In the Cloud and Anywhere

12:25 – 02:00 PM Lunch

02:00 – 03:00 PM Keynote

03:00 – 03:10 PM Afternoon break

03:10 – 04:25 PM Session #4 – Managing Complexity in Images

04:25 – 04:40 PM Afternoon break

04:40 – 05:30 PM Session #5 – Flashy Things, and How to Get Rid of Them

05:30 – 06:00 PM Conclusion

06:00 – 08:00 PM Reception

Program

Session #1: The Need for Speed

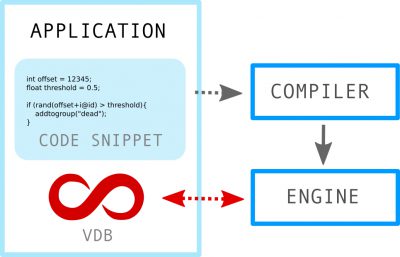

A JIT Expression Language for Fast Manipulation of VDB Points and Volumes

Nick Avramoussis, Richard Jones, Francisco Gochez, Todd Keeler, Matt Warner (DNEG)

Almost all modern digital content creation (DCC) applications used throughout visual effects (VFX) pipelines provide a scripting or programming interface. This useful feature gives users the freedom to create and manipulate assets in bespoke ways, providing a powerful and customizable tool for working within the software. It is particularly useful for working with geometry, a process heavily involved in modelling, effects and animation tasks. However, most widely available examples of these are either confined to their host application or ill-suited to computationally demanding operations. We have created an efficient programming interface built around the open-source geometry format, OpenVDB, to allow fast geometry manipulation whilst offering the required portability for use anywhere in the VFX pipeline.

LibEE 2 – Enabling Fast Edits and Evaluation

Stuart Bryson, Esteban Papp (DreamWorks)

The Premo animation platform developed by DreamWorks utilized LibEE v1 for high performance graph evaluation. The animator experience required fast evaluation, but did not require fast editing of the graph. LibEE v1, therefore, was never designed to support efficient edits. This talk presents an overview of how we developed LibEE v2 to enable fast editing of character rigs while still maintaining or improving upon the speed of evaluation. Overall, LibEE v2 achieves a 100x speedup of authoring operations compared with LibEE v1.

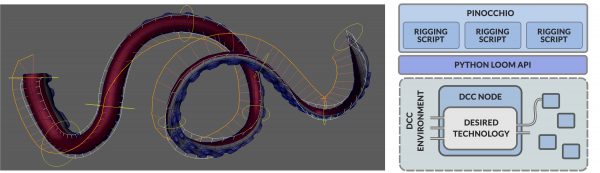

Abstracting Rigging Concepts for a Future Proof Framework Design

Jesus R. Nieto, Charlie Banks, Ryan Chan (DNEG)

Several years ago DNEG set out to build Loom, a new rigging framework, in a bid to improve the performance of our Maya animation rigs. This talk is an update on its development in the light of the discontinuation of its original evaluation back-end, Fabric Engine. In particular, we describe the design choices which enabled us to achieve a DCC-agnostic rigging framework, allowing us to focus on development of pure rigging concepts. We also describe how this setback prompted us to extend the framework to properly deal with the deformation side of rigs, targeting memory efficiency, GPU/CPU memory interaction and high-end performance optimizations.

Session #2: How to Avoid Pulling Your Hair Out

Layering Changes in a Procedural Grooming Pipeline

Curtis Andrus (MPC)

Due to MPC’s departmental and multi-site nature, there is an increasing need from artists to make changes to grooms at different points in the pipeline. We describe a new system we’ve built into Furtility (MPC’s in-house grooming tool) to support these requests, providing a powerful tool for non-destructively layering changes on top of a base groom description.

Merging Procedural and Non-procedural Hair Grooming

Gene Lin, Elena Driskill, Dan Milling, Giorgio Lafratta, Doug Roble (Digital Domain 3.0)

Procedural workflows are widely used for creating hair and fur on characters in the visual effects industry, usually in the form of a node-based system. While they are able to create hairstyles with great variety, procedural systems often need to be combined with other external, non-procedural tools to achieve sophisticated, art-directed shapes. We present a new hair-generating workflow, which merges the procedural and non-procedural components for grooming and animating hairs. This new workflow is now a vital component of our character effects pipeline, expediting the process of handling fast-paced projects.

Session #3: Pipelines, In the Cloud and Anywhere

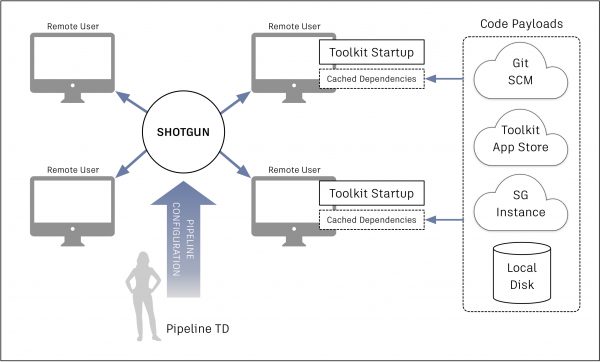

Cloud-based Pipeline Distribution for Effective and Secure Remote Workflows

Manne Öhrström, Josh Tomlinson (Shotgun Software); Rudy Cortes, Satish Goda (Pearl Studio)

We present a secure, cloud-based distribution system for just-in-time artist workflows built on the Shotgun Toolkit platform. We cover the original motivations behind this work, the challenges faced, and the lessons learned as the technology has come to unlock new patterns for managing where and how artists contribute on production. A case study of the technology and its use on production by Pearl Studio is included, showing how the company uses the system to meet their distributed organizational needs and why adoption has been beneficial for their technological and business goals. We show how the system began as a means of downloading and caching individual pipeline components via an app store, before organically evolving into a distribution mechanism for a studio’s entire pipeline. We include real-world examples of these patterns that are in use by Toolkit clients and illustrate how this technology can be applied to cloud-based collaboration in a variety of ways.

Genesis: A Pipeline for Virtual Production

Robert Tovell, Nina Williams (MPC)

Genesis, MPC’s new Virtual Production platform, offers filmmakers full multi-user collaboration and live manipulation of sets and characters, while offering high quality real-time renders with special attention to lighting. In addition, it provides VR and AR enabled tools for a more immersive experience for the Director and enhanced on-set workflows. Virtual Production at MPC came with a new set of requirements from a VFX pipeline, and this document aims to demonstrate how we revisited the current MPC Pipeline and workflows to accommodate the pace of Virtual Production, as well as to cater for the individual requirements coming from each of the large-scale projects at MPC in need of Virtual Production. We needed to meet certain expectations that are fulfilled by the current pipeline while improving and adapting it to best work with the demands of Virtual Production.

Session #4: Managing Complexity in Images

Just Get on With It: A Managed Approach to AOV Manipulation

Colin Alway, Patrick Nagle, Gregory Keech (DNEG)

It is 5 pm and you have just finished addressing the notes on your comp of the giant alien robot ship explosion and are looking forward to the end of the work day. Then out of nowhere your supervisor comes in and says “The client called… they want a big change to the look of the CG… and they want it now”. Depending on how the compositor managed their script, addressing the changes can range from mild discomfort to excruciating pain. Many of these problems come from the way that AOVs (Arbitrary Output Variables) are managed when grading CG. We present a system using a node-based graph in a novel way to manipulate AOVs to maintain mathematical continuity, which can be shared between CG renders. This approach normalizes the complexity of changing the grading on a CG render, allowing the artist to focus on the task at hand and not on juggling complex channel arithmetic.

A Scheme for Storing Object ID Manifests in OpenEXR Images

Peter Hillman (Weta Digital Ltd)

There are various approaches to storing numeric IDs as extra channels within CG rendered images. Using these channels, individual objects can be selected and separately modified. To associate an object with a text string, a table or manifest is required, mapping numeric IDs to text strings. This allows readable identification of ID-based selections, as well as the ability to make a selection using a text search. A scheme for storage of this ID Manifest is proposed which is independent of the approach used to store the IDs within the image. The total size of the raw strings within an ID Manifest may be very large but often contains much repeated information. A novel compression scheme is therefore employed which significantly reduces the size of the manifest.
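As a rough illustration of the idea (not the storage scheme or compression method proposed in the talk), the sketch below treats a manifest as a plain mapping from numeric IDs to text strings. The IDs, object names, and helper functions are all hypothetical, and the ID channel is modelled as a flat list of integers rather than a real OpenEXR channel.

```python
from typing import Dict, List, Set

# Hypothetical manifest: numeric object ID -> readable object name.
# These IDs and names are invented purely for illustration.
MANIFEST: Dict[int, str] = {
    101: "/city/building_A/window_01",
    102: "/city/building_A/window_02",
    205: "/city/street/lamp_07",
}


def select_ids(manifest: Dict[int, str], query: str) -> Set[int]:
    """Text search: return every ID whose name contains the query."""
    needle = query.lower()
    return {obj_id for obj_id, name in manifest.items() if needle in name.lower()}


def describe(manifest: Dict[int, str], ids: Set[int]) -> List[str]:
    """Readable identification: turn a set of IDs back into sorted names."""
    return sorted(manifest.get(obj_id, "<unknown id %d>" % obj_id) for obj_id in ids)


def selection_mask(id_channel: List[int], ids: Set[int]) -> List[bool]:
    """Mark the pixels of a (flattened) ID channel that belong to the selection."""
    return [pixel_id in ids for pixel_id in id_channel]


if __name__ == "__main__":
    windows = select_ids(MANIFEST, "window")
    print(describe(MANIFEST, windows))
    print(selection_mask([101, 205, 102, 999], windows))
```

Because the lookup only needs the numeric ID, the same manifest works regardless of how the IDs themselves are encoded in the image, which is the independence the abstract describes.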

Recolouring Deep Images

Rob Pieké, Yanli Zhao, Fabià Serra Arrizabalaga (MPC Shadow Lab)

This work describes in-progress research to investigate methods for manipulating and/or correcting the colours of samples in deep images. Motivations for wanting this include, but are not limited to: a preference to minimise data footprints by only rendering deep alpha images, better colour manipulation tools in Nuke for 2D (i.e., not-deep) images, and post-render denoising. The most naïve way to (re)colour deep images with 2D RGB images is via Nuke’s DeepRecolor. This effectively projects the RGB colour of a 2D pixel onto each sample of the corresponding deep pixel. This approach has many limitations, including halos introduced when applying depth-of-field as a post-process, and edge artifacts where bright background objects can “spill” into the edges of foreground objects when other objects are composited between them. The work by Egstad et al. on OpenDCX is perhaps the most advanced we’ve seen presented in this area, but it still seems to lack broad adoption. Further, we continued to identify other issues/workflows, and thus decided to pursue our own blue-sky thinking about the overall problem space. Much of what we describe may be conceptually easy to solve by changing upstream departments’ workflows (e.g., “just get lighting to split that out into a separate pass”), but the practical challenges associated with these types of suggestions are often prohibitive as deadlines start looming.
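The naïve projection mentioned above can be sketched as follows. This is an illustrative approximation, not Nuke’s actual DeepRecolor implementation: it assumes a deep pixel is a front-to-back list of sample alphas, that the 2D colour is premultiplied, and that every sample receives the same unpremultiplied colour scaled by its own alpha. All function names are hypothetical.

```python
import math


def flatten_alpha(alphas):
    """Combined alpha of front-to-back samples under the 'over' operator."""
    transmittance = 1.0
    for a in alphas:
        transmittance *= 1.0 - a
    return 1.0 - transmittance


def naive_recolour(alphas, flat_rgb):
    """Give every deep sample the same unpremultiplied colour as the 2D pixel."""
    flat_alpha = flatten_alpha(alphas)
    if flat_alpha <= 0.0:
        return [(0.0, 0.0, 0.0) for _ in alphas]
    unpremult = tuple(c / flat_alpha for c in flat_rgb)
    # Each sample stores a premultiplied colour: its own alpha times the shared colour.
    return [tuple(a * c for c in unpremult) for a in alphas]


def composite_over(alphas, colours):
    """Flatten premultiplied deep samples front to back."""
    out = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for a, rgb in zip(alphas, colours):
        for k in range(3):
            out[k] += transmittance * rgb[k]
        transmittance *= 1.0 - a
    return tuple(out)


if __name__ == "__main__":
    alphas = [0.5, 0.5]          # two samples, front to back
    flat_rgb = (0.3, 0.15, 0.0)  # premultiplied colour of the 2D pixel
    samples = naive_recolour(alphas, flat_rgb)
    restored = composite_over(alphas, samples)
    print(all(math.isclose(r, f, abs_tol=1e-9) for r, f in zip(restored, flat_rgb)))
```

Flattening the recoloured samples reproduces the 2D pixel, but every sample carries the same hue regardless of which object it belongs to. That is consistent with the spill problem described above once other elements are composited between the samples.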

Session #5: Flashy Things, and How to Get Rid of Them

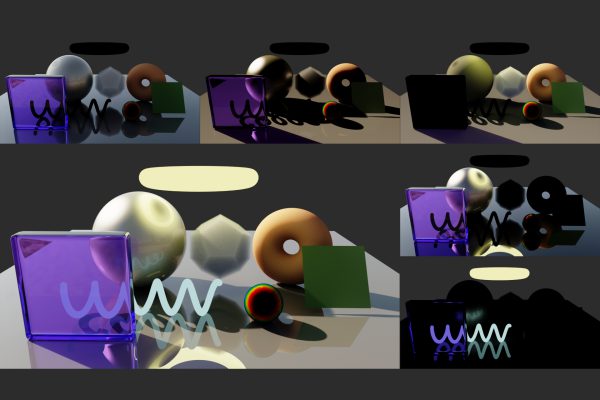

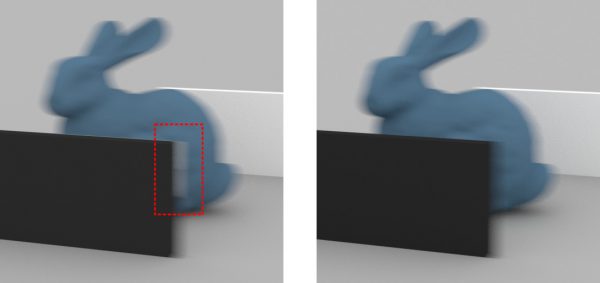

Firefly Detection with Half Buffers

Keith Jeffery (DreamWorks)

Fireflies, or noise spikes, are overly bright pixels that look out of place compared to neighboring pixels; they are a common artifact in Monte Carlo ray traced images. They arise from low-probability events, and would be resolved in the limit as more samples are taken. However, these statistical anomalies are often so far out of the expected range that the time for them to converge, even barring numerical instabilities, is prohibitive. Aside from the general problem of fireflies marring a rendered image, their difference in color and variance values can cause problems for denoising solutions. For example, the distance calculation for non-local means filtering presented in Rousselle et al. [2012] is not robust under extreme differences in variance. This paper addresses removing these fireflies, both to improve the rendered image on its own and to make the available data more uniform for denoising solutions. This paper assumes a denoising framework that makes use of half buffers and pixel variance, such as set forth in Rousselle et al. [2012] and Bitterli et al. [2016]. The variance provides better data than the color channels for determining which pixels do contain fireflies, whereas the half buffers provide some assurance that the detected firefly is not an expected highlight in the rendered image.
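One way to picture the combination of variance and half buffers is the minimal sketch below. It is not the detection method from the paper: the thresholds, the 3x3 neighbourhood, the use of single-channel values, and the rule that a firefly shows up in only one half buffer are all assumptions made for illustration.

```python
from statistics import median


def flag_fireflies(variance, half_a, half_b, var_factor=10.0, rel_tol=0.5):
    """Flag suspect pixels on a 2D grid given as lists of rows of floats.

    Assumed rule: a pixel is flagged when its variance is far above the median
    variance of its neighbours AND the two half buffers strongly disagree.
    A genuine highlight is expected to appear in both half buffers.
    """
    height, width = len(variance), len(variance[0])
    flags = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            neighbours = [
                variance[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
                if (ny, nx) != (y, x)
            ]
            if not neighbours:
                continue  # a 1x1 image has nothing to compare against
            high_variance = variance[y][x] > var_factor * median(neighbours)
            a, b = half_a[y][x], half_b[y][x]
            disagree = abs(a - b) > rel_tol * max(a, b, 1e-8)
            flags[y][x] = high_variance and disagree
    return flags


if __name__ == "__main__":
    var = [[0.01, 0.01, 0.01], [0.01, 5.0, 0.01], [0.01, 0.01, 0.01]]
    ones = [[1.0] * 3 for _ in range(3)]
    spike = [[1.0, 1.0, 1.0], [1.0, 40.0, 1.0], [1.0, 1.0, 1.0]]
    print(flag_fireflies(var, spike, ones)[1][1])   # spike in one half buffer only
    print(flag_fireflies(var, spike, spike)[1][1])  # bright in both half buffers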

Into the Voyd: Teleportation of Light Transport in Incredibles 2

Patrick Coleman, Darwyn Peachey, Tom Nettleship, Ryusuke Villemin, Tobin Jones (Pixar)

In Incredibles 2, a character named Voyd has the ability to create portals that connect two locations in space. A particular challenge for this film is the presence of portals in a number of fast-paced action sequences with multiple characters and objects passing through them, causing multiple views of the scene to be visible in a single shot. To enable the production of this effect while allowing production artists to focus on creative work, we’ve developed a system that allows for the rendering of portals while solving for light transport inside a path tracer, as well as a suite of interactive tools for creating shots and animating characters and objects as they interact with and pass through portals. In addition, we’ve designed an effects animation pipeline that allows for the art-directable creation of boundary elements that allow artists to clearly show the presence of visually distinctive portals in a number of fast-paced action sequences.